Multi-Modal Multi-Hop Source Retrieval using Graph Convolutions

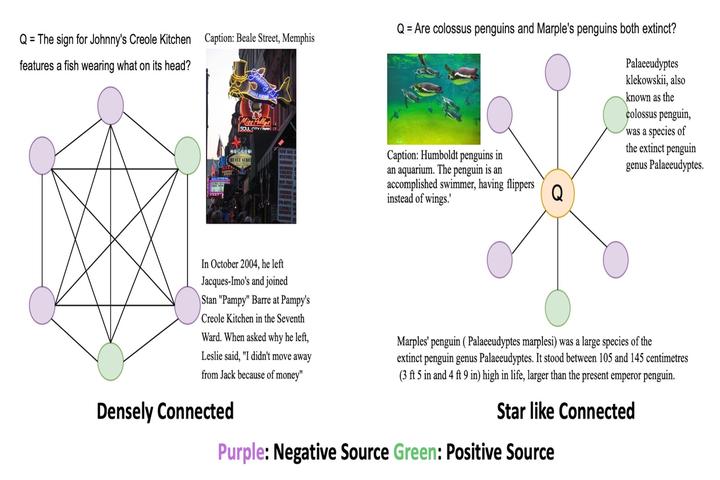

Natural answers to general questions are often generated by aggregating information from multiple sources. However, most question answering approaches assume a single source of information (usually images or text). This is a weak assumption, as information is often sparse and scattered across sources. Moreover, the source of information can be vision, text, speech, or any other modality. In this work, we move away from the simplistic assumptions of visual question answering (VQA) and explore methods that can effectively capture the multimodal and multihop aspects of information retrieval. Specifically, we analyze the task of information source selection through the lens of Graph Convolutional Neural Networks. We propose three independent methods that explore different graph arrangements and weighted connections across nodes. Our experiments and corresponding analysis highlight the ability of graph-based methods to perform multihop reasoning even with primitive representations of input modalities. We also contrast our approach with existing baselines and transformer-based methods, which by design fail to perform multihop reasoning for the source selection task.
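To make the idea of source selection over a graph concrete, the following is a minimal illustrative sketch, not the method proposed in this work. It assumes a single graph whose nodes are the question and the candidate sources, a weighted adjacency matrix, and the standard symmetric-normalized graph convolution. The function names, the two-layer depth, and the sigmoid scoring head are all hypothetical choices made for illustration.

```python
import numpy as np


def normalized_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a weighted, symmetric adjacency matrix."""
    a_hat = adj + np.eye(adj.shape[0])        # add self-loops
    deg = a_hat.sum(axis=1)                   # weighted degree of each node
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(a_norm, h, w):
    """One graph convolution: aggregate neighbor features, project, apply ReLU."""
    return np.maximum(a_norm @ h @ w, 0.0)


def score_sources(adj, features, w1, w2, w_out):
    """Two stacked layers let each node see its 2-hop neighborhood.

    Returns one relevance score in [0, 1] per node (hypothetical scoring head).
    """
    a_norm = normalized_adjacency(adj)
    h = gcn_layer(a_norm, features, w1)
    h = gcn_layer(a_norm, h, w2)
    logits = h @ w_out
    return 1.0 / (1.0 + np.exp(-logits))


# Toy example: node 0 is the question, nodes 1-3 are candidate sources.
# Edge weights are made-up similarity values (assumption for illustration).
rng = np.random.default_rng(0)
adj = np.array([
    [0.0, 0.9, 0.0, 0.2],
    [0.9, 0.0, 0.7, 0.0],
    [0.0, 0.7, 0.0, 0.0],
    [0.2, 0.0, 0.0, 0.0],
])
features = rng.normal(size=(4, 8))            # stand-in node embeddings
w1 = rng.normal(scale=0.1, size=(8, 16))
w2 = rng.normal(scale=0.1, size=(16, 16))
w_out = rng.normal(scale=0.1, size=16)

scores = score_sources(adj, features, w1, w2, w_out)
print(scores[1:])                             # untrained scores for the three sources
```

In this toy graph the question (node 0) is not directly connected to source 2, so a single layer cannot pass information between them. Stacking two layers routes it through source 1, which is the sense in which graph convolutions support multihop reasoning.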