Image credit: Unsplash

Abstract

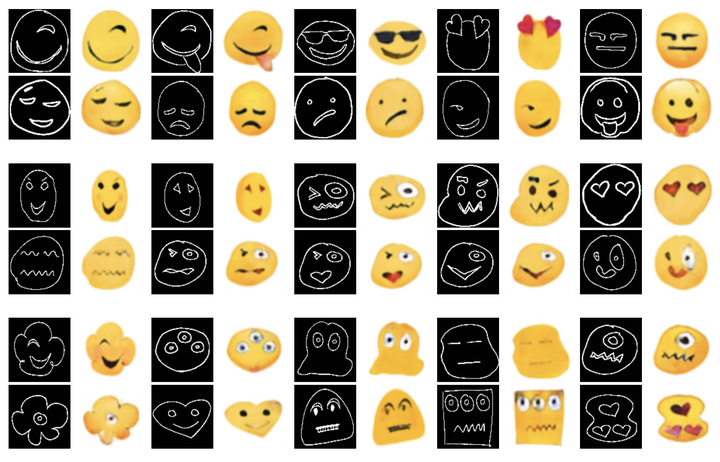

Emojis have changed the way humans communicate. They are the most convenient non-linguistic social cues available to us in this era of social media. However, there is no method that lets users interact creatively with their systems to generate personalised emojis or edit existing ones. Although some experiments have enabled networks to create images, no comprehensive solution gives users the control to create personalised emoticons. In this work, we propose an end-to-end architecture that creates a realistic emoji from a roughly drawn sketch. Our generated emojis achieve a PSNR of 20.30 dB and an SSIM of 0.914. Additionally, we explore a multimodal architecture that generates an emoji from an incomplete sketch together with a handwritten word describing the associated emotion.
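PSNR measures pixel-level fidelity between a generated emoji and its reference image. The abstract does not state how the metric was computed, so the following is only an illustrative sketch of the standard definition, PSNR = 10·log10(MAX² / MSE), in plain Python. The function name `psnr`, the flat-list pixel representation, and the default 8-bit peak value of 255 are assumptions, not details taken from this work.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], generated: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Hypothetical helper: both images are flat sequences of pixel intensities,
    and max_value is the largest possible intensity (255 assumes 8-bit images).
    """
    if len(reference) != len(generated) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels (and channels, if interleaved).
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [0, 64, 128, 255]
    gen = [10, 54, 138, 245]  # every pixel is off by 10, so MSE = 100
    print(round(psnr(ref, gen), 2))  # prints 28.13
```

Under the same 8-bit assumption, a PSNR of 20.30 dB corresponds to an MSE of roughly 607, i.e. a root-mean-square error of about 25 intensity levels per pixel. SSIM, by contrast, compares local luminance, contrast, and structure over image windows rather than raw pixel differences, which is why the two metrics are usually reported together.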